When JPMorgan Chase launched its LLM Suite platform in summer 2024, something unusual happened: within eight months, 200,000 employees were using it daily. No mandate. No compliance requirement. Just organic adoption at a scale that most enterprises can only dream about.

Meanwhile, at most other organizations, a very different story was playing out. MIT’s 2025 “GenAI Divide” report, based on 150 executive interviews and 300 public AI deployments, found that 95% of generative AI pilots fail to deliver rapid revenue acceleration. Not 50%. Not even 80%. Ninety-five percent.

The gap between JPMorgan and everyone else isn’t about technology, talent, or even budget. It’s about something far more fundamental: whether you can prove your AI is working.

The Measurement Gap Is the Real Pilot Killer

Enterprise AI has an accountability problem. Organizations are spending aggressively — global generative AI investment tripled to roughly $37 billion in 2025 — but most cannot answer a simple question: What’s the ROI on our AI?

The numbers tell a stark story. McKinsey’s State of AI 2025 report found that 88% of organizations now use AI regularly in at least one business function. Yet only 6% qualify as “AI high performers” who can attribute more than 5% of total EBIT to AI. The remaining 82% are running AI but cannot tie a meaningful share of their earnings to it.

Deloitte’s State of AI in the Enterprise 2026 survey — covering 3,000 director-to-C-suite leaders across 24 countries — revealed what might be the most telling statistic of all: 74% of organizations want AI to grow revenue, but only 20% have actually seen it happen. That’s not a technology gap. That’s a measurement gap.

Why “Pilot Purgatory” Is Getting Worse, Not Better

You might expect the pilot-to-production problem to ease as AI matures. It hasn’t. S&P Global data shows that 42% of companies abandoned most of their AI initiatives in 2025, more than double the 17% abandonment rate just one year earlier. The average enterprise scrapped 46% of AI pilots before they ever reached production. By another measure, only 4 of every 33 prototypes built made it into production, an 88% failure rate at the scaling stage.
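The scaling-stage figure follows directly from the prototype counts, and the same arithmetic confirms the year-over-year abandonment comparison:

```latex
\begin{aligned}
\text{scaling failure rate} &= 1 - \frac{4}{33} = \frac{29}{33} \approx 0.879 \approx 88\% \\
\text{abandonment growth} &= \frac{42\%}{17\%} \approx 2.47\times \quad (\text{more than double})
\end{aligned}
```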

The pattern is consistent: organizations launch pilots with enthusiasm, run them for three to six months, then struggle to justify continued investment. Without baseline metrics established before deployment, there’s no way to quantify what AI actually changed. Without ongoing measurement, there’s no way to distinguish a successful pilot from an expensive experiment. And without clear ROI data, there’s no executive willing to sign off on scaling.

Gartner reinforced this trajectory in June 2025, predicting that over 40% of agentic AI projects will be canceled by the end of 2027 and citing three drivers: escalating costs, unclear business value, and inadequate risk controls. The presence of “unclear business value” on that list is telling: often the problem is not that the AI doesn’t work, but that nobody built the infrastructure to prove that it does.

What the 5% Do Differently

The companies that successfully move AI from pilot to production share a pattern that has nothing to do with having better models or bigger datasets. They build measurement into the process from day one.

JPMorgan didn’t just deploy AI — they tracked adoption rates, time savings, and productivity gains from the first week. Their AI benefits are growing 30-40% annually, and they know this because they measure it. Walmart didn’t just experiment with AI in their supply chain — they documented that route optimization eliminated 30 million unnecessary delivery miles and avoided 94 million pounds of CO2 emissions. Their customer service AI cut problem resolution times by 40%, a number they can report because they established baselines before deployment.

This is the pattern MIT’s research confirmed across hundreds of deployments: the companies that scale AI successfully are the ones that treat measurement as infrastructure, not an afterthought. They know which processes AI is accelerating, by how much, and at what cost. They can calculate the total cost of ownership — including the API costs, engineering time, and maintenance burden that most organizations bury in IT budgets. And they can present executives with a clear picture: here’s what AI costs, here’s what it delivers, and here’s why scaling it makes financial sense.
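As a minimal sketch of the cost side of that picture, the functions below total the three cost categories named above (API costs, engineering time, and maintenance) and compare them with a measured benefit using the conventional ROI formula, (benefit - cost) / cost. The class and function names, the hourly rate, and all figures are hypothetical placeholders, not data from any deployment cited here.

```python
from dataclasses import dataclass


@dataclass
class AICostInputs:
    """Hypothetical annual cost inputs for one AI deployment."""
    api_spend: float          # model/API usage fees
    engineering_hours: float  # build and integration time
    maintenance_hours: float  # ongoing monitoring, prompt updates, support
    hourly_rate: float        # fully loaded cost of one engineering hour


def total_cost_of_ownership(c: AICostInputs) -> float:
    """Add direct API spend to the labor costs that often hide in IT budgets."""
    labor = (c.engineering_hours + c.maintenance_hours) * c.hourly_rate
    return c.api_spend + labor


def roi(annual_benefit: float, tco: float) -> float:
    """Conventional ROI: net gain divided by cost (0.5 means a 50% return)."""
    if tco <= 0:
        raise ValueError("total cost must be positive")
    return (annual_benefit - tco) / tco


# Illustrative numbers only.
costs = AICostInputs(api_spend=60_000, engineering_hours=800,
                     maintenance_hours=400, hourly_rate=100)
tco = total_cost_of_ownership(costs)  # 60,000 + 1,200 h * 100 = 180,000
print(f"TCO: ${tco:,.0f}")
print(f"ROI: {roi(annual_benefit=270_000, tco=tco):.0%}")
```

Counting labor alongside API fees is the point of the exercise: in this example, API spend is only a third of the total cost.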

The Four Phases of Scaling (and Where Most Organizations Get Stuck)

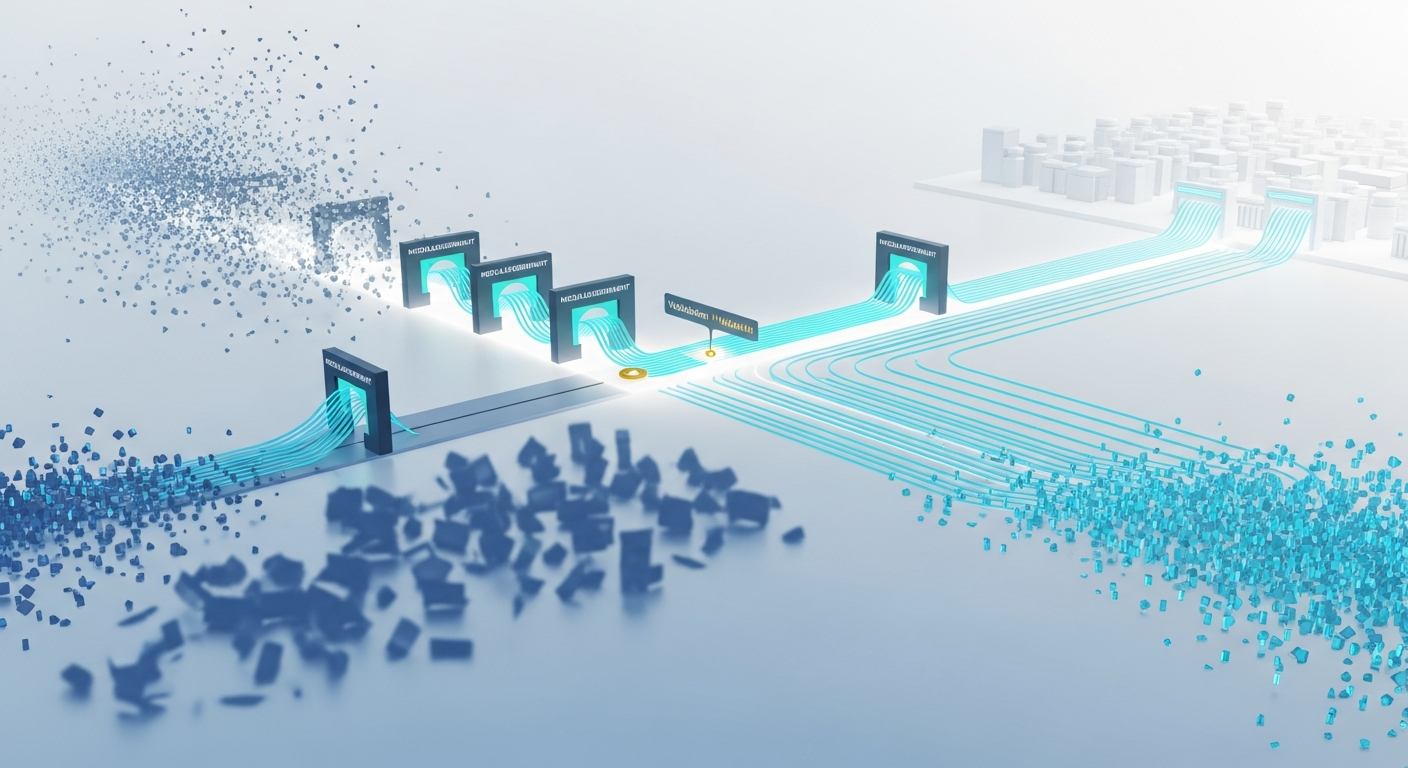

Successfully moving AI from pilot to production typically follows four phases, each gated by measurement milestones rather than arbitrary timelines.

Phase 1: Validate value (weeks 1-4). Before deploying the AI solution to a small group, establish clear baselines. What does the process look like without AI? How long does it take? What does it cost? What’s the error rate? Without these pre-AI measurements, you’ll never be able to quantify impact. Most organizations skip this step entirely and then wonder why they can’t prove ROI six months later.
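A minimal sketch of what recording those baselines might look like: capture the same three metrics (time, cost, and error rate per task) before and after deployment, then report the relative change. The metric names and sample values are hypothetical; a real baseline would be averaged over a representative sample of work rather than taken from a single reading.

```python
from dataclasses import dataclass, fields


@dataclass
class ProcessMetrics:
    """Per-task metrics for one process; names are illustrative."""
    minutes_per_task: float
    cost_per_task: float
    error_rate: float  # fraction of tasks needing rework, 0.0-1.0


def relative_change(baseline: ProcessMetrics, current: ProcessMetrics) -> dict[str, float]:
    """Return (current - baseline) / baseline for each metric.

    Negative values are reductions, the desired direction for all three metrics.
    """
    changes = {}
    for f in fields(ProcessMetrics):
        before = getattr(baseline, f.name)
        after = getattr(current, f.name)
        if before == 0:
            raise ValueError(f"baseline for {f.name} is zero; cannot compute change")
        changes[f.name] = (after - before) / before
    return changes


baseline = ProcessMetrics(minutes_per_task=50, cost_per_task=20.0, error_rate=0.08)
with_ai = ProcessMetrics(minutes_per_task=30, cost_per_task=14.0, error_rate=0.06)

for name, delta in relative_change(baseline, with_ai).items():
    print(f"{name}: {delta:+.0%}")
```

Without the `baseline` object, none of these deltas can be computed, which is exactly the gap that leaves pilots unable to prove ROI.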

Phase 2: Harden for production (weeks 5-10). Once you have evidence that the AI delivers measurable value, build the governance and monitoring infrastructure needed for scale. This means policy enforcement, access controls, audit trails, and cost tracking. It also means ensuring someone owns ongoing operations — not as a side project, but as a defined responsibility.
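The cost-tracking and audit-trail pieces of Phase 2 can start small. The sketch below records one entry per AI request (who made it, which team, which tool, and what it cost) and rolls spend up by team. The record fields, tool names, and per-token price are hypothetical; a production system would persist these records to durable, access-controlled storage rather than an in-memory list.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class UsageRecord:
    """One audited AI request. Field names are illustrative."""
    timestamp: datetime
    user: str
    team: str
    tool: str
    tokens: int
    cost_usd: float


class UsageLedger:
    """Append-only, in-memory log of AI usage for auditing and cost tracking."""

    def __init__(self, price_per_1k_tokens: float):
        self.price_per_1k_tokens = price_per_1k_tokens
        self._records: list[UsageRecord] = []

    def record(self, user: str, team: str, tool: str, tokens: int) -> UsageRecord:
        entry = UsageRecord(
            timestamp=datetime.now(timezone.utc),
            user=user,
            team=team,
            tool=tool,
            tokens=tokens,
            cost_usd=tokens / 1000 * self.price_per_1k_tokens,
        )
        self._records.append(entry)
        return entry

    def records(self) -> tuple[UsageRecord, ...]:
        # A tuple of frozen records, so callers cannot rewrite history.
        return tuple(self._records)

    def cost_by_team(self) -> dict[str, float]:
        totals: dict[str, float] = defaultdict(float)
        for r in self._records:
            totals[r.team] += r.cost_usd
        return dict(totals)


ledger = UsageLedger(price_per_1k_tokens=0.01)  # hypothetical price
ledger.record("ana", "finance", "chat-assistant", tokens=12_000)
ledger.record("raj", "support", "ticket-summarizer", tokens=30_000)
ledger.record("li", "finance", "chat-assistant", tokens=8_000)
print(ledger.cost_by_team())
```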

Phase 3: Controlled expansion (weeks 11-16). Roll out to a broader group while continuing to measure. Are the gains from Phase 1 holding at scale? Are costs scaling linearly or exponentially? Are new user segments finding different use cases? This phase is where many organizations discover that their pilot’s curated dataset doesn’t translate to messy real-world data; Gartner has estimated that data quality issues derail 85% of AI projects.
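One rough way to answer the “linearly or exponentially” question is to compare how cost grows relative to usage between two rollout stages. The sketch below estimates the exponent k in cost ∝ users^k from two observations: k near 1 means roughly linear growth, while k well above 1 means cost is outpacing adoption. This is a simplified power-law check standing in for a full curve fit (it detects superlinear growth rather than testing specifically for exponential growth), and the tolerance and sample numbers are hypothetical.

```python
import math


def scaling_exponent(users_a: int, cost_a: float, users_b: int, cost_b: float) -> float:
    """Estimate k in cost ~ users**k from two (users, cost) observations."""
    if min(users_a, users_b, cost_a, cost_b) <= 0 or users_a == users_b:
        raise ValueError("need two distinct, positive observations")
    return math.log(cost_b / cost_a) / math.log(users_b / users_a)


def classify(k: float, tolerance: float = 0.15) -> str:
    """Label the growth pattern; the tolerance band is an arbitrary choice."""
    if k > 1 + tolerance:
        return "superlinear: cost outpacing adoption"
    if k < 1 - tolerance:
        return "sublinear: economies of scale"
    return "roughly linear"


# Pilot: 50 users at $2,000/month. Expansion: 400 users at $32,000/month (illustrative).
k = scaling_exponent(50, 2_000, 400, 32_000)
print(f"k = {k:.2f} -> {classify(k)}")
```

Here users grow 8x while cost grows 16x, so k = log 16 / log 8 ≈ 1.33, a signal to investigate before expanding further.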

Phase 4: Full deployment and continuous optimization. With validated ROI data from the first three phases, you have the evidence to justify enterprise-wide investment. But the measurement doesn’t stop — it shifts from proving value to optimizing it. Which teams are getting the most benefit? Where are costs disproportionate to returns? What new use cases are emerging?
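For the optimization questions in Phase 4, a simple starting point is to rank teams by measured benefit per dollar of AI cost and flag any team whose costs are out of proportion to its returns. The team names, figures, and the 1.0 cutoff (benefit at least equal to cost) are hypothetical choices for illustration.

```python
from typing import NamedTuple


class TeamResult(NamedTuple):
    team: str
    monthly_benefit: float  # measured value, e.g. hours saved x loaded rate
    monthly_cost: float     # AI spend attributed to the team


def rank_by_return(results: list[TeamResult],
                   min_ratio: float = 1.0) -> list[tuple[str, float, bool]]:
    """Sort teams by benefit/cost ratio (highest first) and flag low returns."""
    if any(r.monthly_cost <= 0 for r in results):
        raise ValueError("every team needs a positive attributed cost")
    ranked = sorted(results, key=lambda r: r.monthly_benefit / r.monthly_cost, reverse=True)
    report = []
    for r in ranked:
        ratio = r.monthly_benefit / r.monthly_cost
        report.append((r.team, round(ratio, 2), ratio < min_ratio))
    return report


teams = [
    TeamResult("support", monthly_benefit=48_000, monthly_cost=12_000),
    TeamResult("legal", monthly_benefit=9_000, monthly_cost=10_000),
    TeamResult("finance", monthly_benefit=20_000, monthly_cost=8_000),
]
for team, ratio, flagged in rank_by_return(teams):
    print(f"{team:<8} {ratio:>5.2f}x{'  <- review costs' if flagged else ''}")
```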

The organizations that stall are almost always stuck between Phase 1 and Phase 2. They ran a pilot, it “seemed to work,” but they never established the baselines or tracking needed to prove it. So the pilot sits in limbo — too promising to kill, too unproven to scale.
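The Phase 1 to Phase 2 stall can be made explicit with a gate that refuses to advance a pilot unless a pre-deployment baseline exists and the measured improvement clears an agreed bar. The sketch below is one hypothetical way to encode that rule; the function name, the single time-per-task metric, and the 20% threshold are assumptions, and a real gate would typically check several metrics plus cost.

```python
from typing import Optional


def ready_to_harden(
    baseline_minutes: Optional[float],
    pilot_minutes: float,
    min_improvement: float = 0.20,  # hypothetical bar: at least 20% faster
) -> tuple[bool, str]:
    """Decide whether a pilot has earned the move from Phase 1 to Phase 2."""
    if baseline_minutes is None or baseline_minutes <= 0:
        return False, "no pre-deployment baseline: impact cannot be quantified"
    improvement = (baseline_minutes - pilot_minutes) / baseline_minutes
    if improvement < min_improvement:
        return False, f"improvement {improvement:.0%} is below the {min_improvement:.0%} bar"
    return True, f"improvement {improvement:.0%} clears the bar"


print(ready_to_harden(None, 30))  # a pilot that "seemed to work"
print(ready_to_harden(50, 45))    # measured, but gains too small
print(ready_to_harden(50, 30))    # measured and proven
```

The first case is the limbo described above: the pilot may be fine, but without a baseline the gate has nothing to evaluate.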

Buy vs. Build: A Measurement Shortcut

MIT’s research uncovered a surprising finding about the build-versus-buy decision. Purchasing AI tools from specialized vendors and building partnerships succeeds roughly 67% of the time, while internal builds succeed only about 22% of the time. The gap is striking, and measurement is a significant part of the explanation.

Specialized vendors have already solved the measurement problem for their specific domain. They’ve established the benchmarks, built the tracking, and validated the ROI across hundreds of customers. When an enterprise buys rather than builds, they’re importing not just the technology but the measurement framework that proves it works.

Internal builds, by contrast, require organizations to solve two problems simultaneously: making the AI work and building the infrastructure to prove it works. Most teams focus entirely on the first problem and neglect the second.

From Science Experiment to Business Case

Harvard Business Review captured the core challenge in November 2025: “Most AI initiatives fail not because the models are weak, but because organizations aren’t built to sustain them.” Their five-part framework for scaling AI emphasizes that the bottleneck is organizational, not technical — and at the center of every organizational bottleneck is the inability to prove value.

The path from pilot to production isn’t about better technology. It’s about building the measurement infrastructure that turns an AI experiment into a business case. That means establishing baselines before deployment, tracking outcomes continuously, calculating total cost of ownership honestly, and presenting results in terms executives care about: revenue impact, cost reduction, risk mitigation, and time to value.

Without that measurement layer, every AI pilot is a science experiment. And enterprises don’t scale science experiments — they scale proven investments.

Ready to move your AI from pilot to production? Schedule a demo to see how Olakai helps enterprises measure AI ROI, govern risk, and scale what works across every AI tool and team.